Is a higher sampling frequency always better, or should it be dynamically adjusted?

Sampling rates play a crucial role in data collection and analysis across various fields, including signal processing, econometrics, and medical imaging. A higher sampling frequency can provide more accurate representations of dynamic systems, but it also comes with increased computational costs and storage requirements. The question arises whether a higher sampling rate is always better or if it should be dynamically adjusted based on specific conditions.

1. Fundamentals of Sampling Rates

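The Nyquist-Shannon sampling theorem states that a band-limited signal can be reconstructed exactly from its samples if the sampling frequency is greater than twice the highest frequency component present in the signal (the Nyquist rate). This principle has been widely adopted across disciplines, but its implications go beyond simply meeting that threshold. In practice, systems usually sample somewhat above the Nyquist rate, because real anti-aliasing filters cannot cut off perfectly at the band edge.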
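The criterion, and what happens when it is violated, can be sketched in a few lines of Python. This is a minimal illustration assuming a single real sinusoid and ideal point sampling; the function names `alias_frequency` and `satisfies_nyquist` are hypothetical, and the 3 kHz / 7 kHz tones and 10 kHz rate are arbitrary example values.

```python
def alias_frequency(f_signal: float, fs: float) -> float:
    """Apparent frequency (Hz) of a real sinusoid at f_signal Hz after sampling at fs Hz.

    Sampling folds every frequency into the band [0, fs/2]: the tone shows up
    at its distance to the nearest integer multiple of fs.
    """
    if fs <= 0:
        raise ValueError("fs must be positive")
    # Distance from f_signal to the nearest multiple of fs.
    remainder = f_signal % fs
    return min(remainder, fs - remainder)


def satisfies_nyquist(f_max: float, fs: float) -> bool:
    """True if fs is strictly greater than twice the highest frequency component."""
    return fs > 2 * f_max


# A 3 kHz tone sampled at 10 kHz is preserved; a 7 kHz tone folds down to 3 kHz.
for f in (3_000.0, 7_000.0):
    print(f, satisfies_nyquist(f, 10_000.0), alias_frequency(f, 10_000.0))
```

Both tones show up at 3 kHz in the sampled data, which is why aliasing cannot be undone after sampling and has to be prevented with a sufficient rate or an anti-aliasing filter.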

In practice, higher sampling rates can offer several benefits:

- Improved resolution: Higher sampling frequencies shorten the interval between samples (1/fs), giving finer temporal resolution and allowing better detection and analysis of fast-changing, high-frequency components.

- Increased accuracy: Raising the sampling rate raises the Nyquist frequency (half the sampling rate), so a larger portion of the signal's spectrum is captured without aliasing. Content above the Nyquist frequency still aliases unless it is removed by an anti-aliasing filter before sampling.

- Enhanced feature extraction: Higher sampling frequencies enable the detection of subtle features that may be lost at lower sampling rates.

However, these benefits come with increased costs:

- Computational complexity: Higher sampling rates produce more samples per second, and since every sample must be processed, the cost of processing and analysis grows at least in proportion to the sampling rate.

- Data storage requirements: The amount of raw data grows linearly with the sampling frequency (doubling the rate doubles the data volume), which quickly adds up for long recordings or many channels.
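The linear relationship between sampling rate and storage is simple arithmetic. The sketch below assumes uncompressed samples of a fixed size; the helper name `raw_storage_bytes` is hypothetical, and the 48 kHz / 96 kHz rates and 16-bit samples are illustrative choices, not figures from this article.

```python
def raw_storage_bytes(fs_hz: float, duration_s: float,
                      bytes_per_sample: int, channels: int = 1) -> float:
    """Uncompressed size of a uniformly sampled recording, in bytes.

    Every factor enters linearly, so doubling the sampling rate doubles the size.
    """
    return fs_hz * duration_s * bytes_per_sample * channels


# One hour of single-channel 16-bit (2-byte) samples at two different rates.
hour = 3600.0
low = raw_storage_bytes(48_000, hour, bytes_per_sample=2)
high = raw_storage_bytes(96_000, hour, bytes_per_sample=2)
print(low / 1e6, high / 1e6)  # sizes in megabytes: prints 345.6 691.2
```

Compression changes the constant factor but not the basic picture: more samples per second means more data to move and keep.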

2. Dynamic Sampling Rate Adjustment

Given these considerations, it is clear that a one-size-fits-all approach to sampling frequencies may not be optimal. Instead, dynamic adjustment of sampling rates based on specific conditions can offer improved performance and efficiency:

- Context-aware sampling: By adjusting the sampling frequency in response to changing signal characteristics or environmental conditions, researchers can optimize data collection for specific applications.

- Adaptive sampling: Dynamically adjusting the sampling rate in real time allows for more efficient use of resources while maintaining accuracy.
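One simple form of adaptive sampling shortens the sampling interval when the signal changes quickly and lengthens it when the signal is quiet. The sketch below is a hypothetical threshold policy, not a method prescribed by this article: `adaptive_sample`, the halving/doubling rule, the `threshold` value, and the `bursty` test signal are all assumptions chosen for illustration.

```python
import math
from typing import Callable, List, Tuple


def adaptive_sample(signal: Callable[[float], float], t_end: float,
                    dt_min: float, dt_max: float,
                    threshold: float) -> List[Tuple[float, float]]:
    """Sample signal(t) on [0, t_end] with a step that adapts to how fast it changes.

    Hypothetical policy: if consecutive samples differ by more than `threshold`,
    halve the step (down to dt_min); if they differ by less than threshold / 4,
    double it (up to dt_max). Otherwise keep the current step.
    """
    dt = dt_max
    t = 0.0
    samples = [(t, signal(t))]
    while t + dt <= t_end:
        t += dt
        value = signal(t)
        change = abs(value - samples[-1][1])
        samples.append((t, value))
        # The policy is reactive: it adjusts the *next* step after seeing this change.
        if change > threshold:
            dt = max(dt / 2, dt_min)
        elif change < threshold / 4:
            dt = min(dt * 2, dt_max)
    return samples


def bursty(t: float) -> float:
    """Flat signal with a 7 Hz oscillation between t = 1 s and t = 2 s."""
    return math.sin(2 * math.pi * 7 * t) if 1.0 <= t < 2.0 else 0.0


samples = adaptive_sample(bursty, t_end=3.0, dt_min=0.001, dt_max=0.1, threshold=0.2)
quiet = sum(1 for t, _ in samples if t < 1.0)
burst = sum(1 for t, _ in samples if 1.0 <= t < 2.0)
print(len(samples), quiet, burst)
```

Most samples land inside the active interval, while the flat stretches are covered at the coarse rate. Because the policy only reacts after a coarse sample lands inside an event, the coarsest step (`dt_max` here) still has to be short enough to catch the fastest events of interest; a burst that falls entirely between coarse samples, or aliases to a flat value at that rate, would go unnoticed.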

3. Application-Specific Considerations

Different fields have unique requirements and constraints that must be taken into account when determining optimal sampling frequencies:

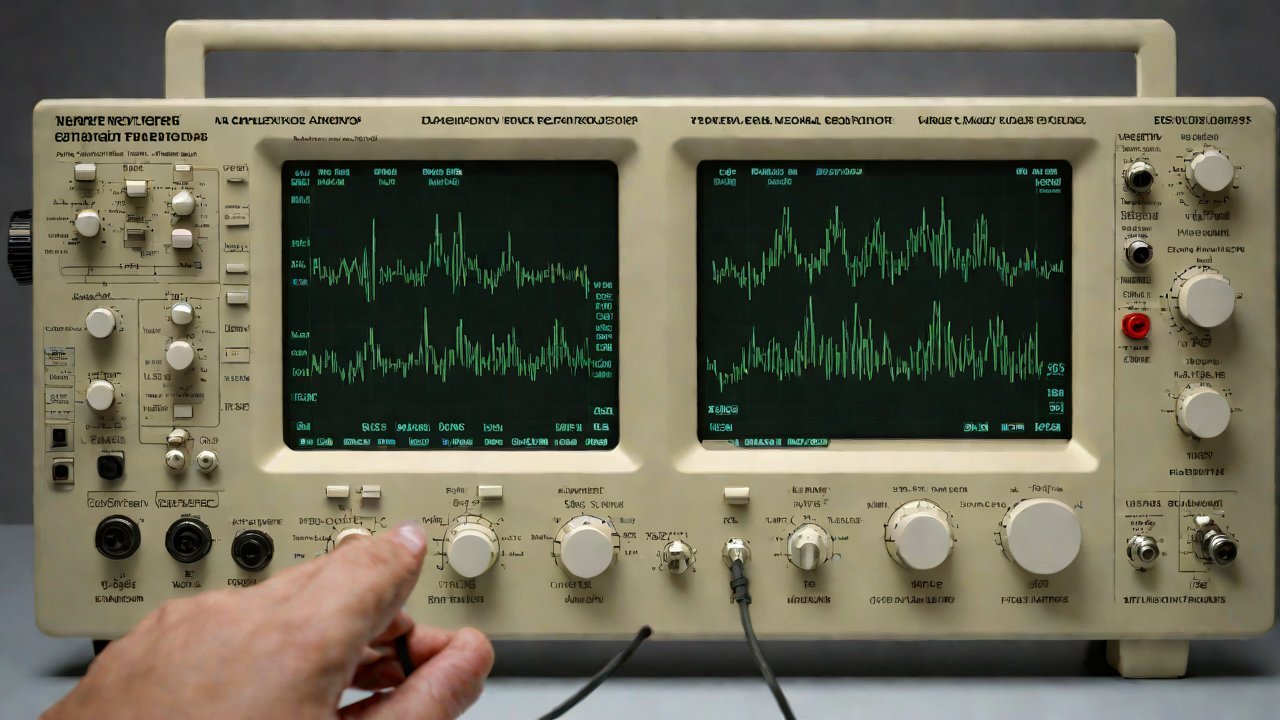

- Signal processing: Applications such as audio, communications, and vibration analysis often involve high-frequency components, which require correspondingly high sampling rates.

- Econometrics: Time-series analysis in economics requires careful consideration of sampling frequencies to avoid introducing artificial cycles or trends.

- Medical imaging: Sampling frequencies must balance spatial resolution with temporal resolution to capture dynamic processes within the body.

| Application | Illustrative sampling frequency range |
|---|---|
| Signal processing | 10 kHz – 100 MHz |
| Econometrics | 1 Hz – 100 Hz |
| Medical imaging | 10 Hz – 1000 Hz |
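The econometrics concern about artificial cycles is the same aliasing effect at a much slower time scale. The sketch below assumes a series that is point-sampled (one observation taken per interval, not averaged over it) and contains a pure 12-month seasonal cycle; the function name `apparent_period` and the chosen intervals are hypothetical examples.

```python
from fractions import Fraction
from typing import Optional


def apparent_period(true_period: Fraction, sampling_interval: Fraction) -> Optional[Fraction]:
    """Period of the cycle an observer sees after point-sampling a sinusoid.

    Works in cycles per unit time: the true frequency folds into [0, fs/2]
    around the nearest multiple of the sampling frequency fs.
    Returns None if the cycle aliases to a constant (zero frequency).
    """
    f = Fraction(1) / true_period
    fs = Fraction(1) / sampling_interval
    remainder = f % fs
    folded = min(remainder, fs - remainder)
    return None if folded == 0 else 1 / folded


# A 12-month seasonal cycle observed at different (point-sampled) intervals.
print(apparent_period(Fraction(12), Fraction(1)))   # monthly: 12, the true cycle
print(apparent_period(Fraction(12), Fraction(11)))  # every 11 months: 132, a spurious 11-year cycle
print(apparent_period(Fraction(12), Fraction(12)))  # every 12 months: None, the cycle vanishes
```

Exact fractions avoid floating-point noise in the folding step. Series built by averaging over each interval (temporal aggregation) attenuate the seasonal component rather than folding it cleanly, so this sketch applies only to the point-sampling case.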

4. Challenges and Limitations

While dynamic sampling rate adjustment offers many benefits, several challenges and limitations must be addressed:

- Real-time processing: Implementing real-time adaptive sampling requires significant computational resources and sophisticated algorithms.

- Data storage and transmission: The increased data volume generated by higher sampling rates poses challenges for storage and transmission.

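- Signal quality: Sampling faster widens the bandwidth that reaches the digitized record, so more broadband noise is captured unless it is filtered out, and high-speed converters typically offer fewer effective bits of resolution than slower ones.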

5. Future Directions

As technology advances, new methods and tools will emerge to address the complexities of dynamic sampling rate adjustment:

- Machine learning-based approaches: Machine learning algorithms can be used to adjust sampling rates in real time based on changing signal characteristics.

- Advanced signal processing techniques: Techniques such as compressive sensing (sparse sampling) can recover signals that are sparse in some basis from fewer samples than the Nyquist rate would suggest, making data acquisition and analysis more efficient.

- Hardware advancements: Advances in hardware technology will enable faster and more efficient processing of high-frequency signals.

In conclusion, while higher sampling frequencies can provide improved resolution and accuracy, they also come with increased costs. Dynamic adjustment of sampling rates based on specific conditions offers a promising approach to optimizing data collection and analysis across various fields.

IOT Cloud Platform

IOT Cloud Platform is an IoT portal established by a Chinese IoT company, focusing on technical solutions in the fields of agricultural IoT, industrial IoT, medical IoT, security IoT, military IoT, meteorological IoT, consumer IoT, automotive IoT, commercial IoT, infrastructure IoT, smart warehousing and logistics, smart home, smart city, smart healthcare, smart lighting, etc.

The IoT Cloud Platform blog is a top IoT technology stack, providing technical knowledge on IoT, robotics, artificial intelligence (generative artificial intelligence AIGC), edge computing, AR/VR, cloud computing, quantum computing, blockchain, smart surveillance cameras, drones, RFID tags, gateways, GPS, 3D printing, 4D printing, autonomous driving, etc.